Last week, PostHog's CI ran 575,894 jobs, processed 1.18 billion log lines, and executed more than 33 million individual test runs. At that volume, even a 99.98% pass rate generates meaningful noise. We're building Mendral, an AI agent that diagnoses CI failures, quarantines flaky tests, and opens PRs with fixes. Here's what we've learned running it on one of the largest public monorepos we've seen.

But first, some context on why we're building this. Back in 2013, I was managing Docker's engineering team of 100 people, and my co-founder Andrea was the team's tech lead and lead architect. We scaled Docker's CI from a handful of builds to something that ran constantly across a massive contributor base. Even back then, flaky tests and large PRs that were painful to review were our biggest headaches. We spent a disproportionate amount of time debugging CI failures that had nothing to do with the code being shipped. That was over a decade ago, with no AI coding tools generating PRs at scale. The problem has only gotten worse since then. Mendral is the tool we wished we had at Docker.

PostHog's CI in one week

PostHog is a ~100 person fully remote team, all pushing constantly to a large public monorepo. They ship fast, and their CI infrastructure reflects that. Here's what one week looks like (Jan 27 - Feb 2):

- 575,894 CI jobs across 94,574 workflow runs

- 1.18 billion log lines (76.6 GiB)

- 33.4 million test executions across 22,477 unique tests

- 3.6 years of compute time in a single week

- 65 commits merged to main per day, 105 PRs tested per day

- 98 human contributors active in one week

- Every commit to main triggers an average of 221 parallel jobs

On their busiest day (a Tuesday), they burned through 300 days of compute in 24 hours. These are not the numbers of a team with a CI problem. These are the numbers of a team that moves extremely fast and takes testing seriously.

The physics of scale

At this velocity, flaky tests are inevitable. PostHog's test pass rate is 99.98%, which is genuinely excellent across 22,477 tests. But at 33.4 million weekly test executions, the remaining 0.02% still amounts to several thousand failing executions every week. That's just math.
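To make that math concrete, here is a back-of-the-envelope sketch. It assumes the 99.98% pass rate applies per test execution and treats the published (rounded) figures as exact, so the result is an approximation, not a measured count.

```python
# Figures from the week described above (rounded in the source).
WEEKLY_TEST_EXECUTIONS = 33_400_000
PASS_RATE = 0.9998


def expected_failures(executions: int, pass_rate: float) -> float:
    """Expected number of failing test executions at a given pass rate."""
    return executions * (1.0 - pass_rate)


failures_per_week = expected_failures(WEEKLY_TEST_EXECUTIONS, PASS_RATE)
print(f"{failures_per_week:,.0f} failing executions per week")
print(f"{failures_per_week / 7:,.0f} failing executions per day")
```

Under those assumptions, that is several thousand red test results a week for someone (or something) to look at.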

About 14% of their total compute goes to failures and cancellations, and roughly 3.5% of all jobs are re-runs. This isn't a PostHog-specific issue. Any team operating at this pace, with this test coverage, will hit the same dynamics. The question is how you deal with it.
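The same kind of rough arithmetic applies to the compute and re-run shares. This sketch takes the approximate percentages above at face value and uses a 365-day year, so treat the outputs as orders of magnitude.

```python
# Figures from the week described above; the shares are approximate.
COMPUTE_YEARS_PER_WEEK = 3.6
TOTAL_JOBS = 575_894
FAILED_OR_CANCELLED_SHARE = 0.14  # "about 14%" of compute
RERUN_SHARE = 0.035               # "roughly 3.5%" of jobs

# Compute time spent on failed or cancelled work, in days.
wasted_compute_days = COMPUTE_YEARS_PER_WEEK * 365 * FAILED_OR_CANCELLED_SHARE

# Number of jobs in the week that were re-runs.
rerun_jobs = TOTAL_JOBS * RERUN_SHARE

print(f"~{wasted_compute_days:.0f} days of compute on failures and cancellations")
print(f"~{rerun_jobs:,.0f} re-run jobs")
```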

Most teams just live with it. Engineers learn which tests are flaky, they re-run, they move on. It works up to a point. But when you have 98 active contributors and 221 parallel jobs per commit, the overhead of investigating and re-running adds up quietly. The PostHog team recognized this early and decided to be proactive about it, which is how we started working together.

What actually happens with flaky tests at scale

A test that passes 95% of the time sounds mostly fine. But when your CI runs 221 jobs per commit and you're merging 65 commits per day to main, that test is failing multiple times daily. It's not the individual failure that hurts. It's the investigation. Someone sees red CI, drops what they're doing, opens the logs, tries to figure out if their change broke something or if it's a known flake. Then they re-run. Maybe it passes. Maybe it doesn't. Maybe they ping someone on Slack. Maybe three people are looking at the same failure independently.
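A quick sketch of why "95% reliable" hurts at this cadence. It assumes the flaky test runs exactly once per commit merged to main and that runs are independent, which is a simplification; PR runs would add to the total.

```python
COMMITS_TO_MAIN_PER_DAY = 65
PRS_TESTED_PER_DAY = 105
TEST_PASS_RATE = 0.95


def expected_daily_failures(runs_per_day: int, pass_rate: float) -> float:
    """Average number of failures per day for a single flaky test."""
    return runs_per_day * (1.0 - pass_rate)


def chance_of_at_least_one_failure(runs_per_day: int, pass_rate: float) -> float:
    """Probability the test fails at least once in a day, assuming independent runs."""
    return 1.0 - pass_rate ** runs_per_day


on_main = expected_daily_failures(COMMITS_TO_MAIN_PER_DAY, TEST_PASS_RATE)
with_prs = expected_daily_failures(
    COMMITS_TO_MAIN_PER_DAY + PRS_TESTED_PER_DAY, TEST_PASS_RATE
)
print(f"main only: {on_main:.2f} failures/day")
print(f"main + one run per PR: {with_prs:.2f} failures/day")
print(f"P(at least one failure on main today): "
      f"{chance_of_at_least_one_failure(COMMITS_TO_MAIN_PER_DAY, TEST_PASS_RATE):.1%}")
```

One such test is enough to produce a few red builds a day on main alone, and each one can trigger the investigation described above.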

At 10 engineers, a flaky test is annoying. At 100, it's a tax on everyone's productivity.

How Mendral works at PostHog

Our agent is a GitHub App. You install it on your repo (takes about 5 minutes), and it starts watching every commit, every CI run, every log output. Here's what it does concretely:

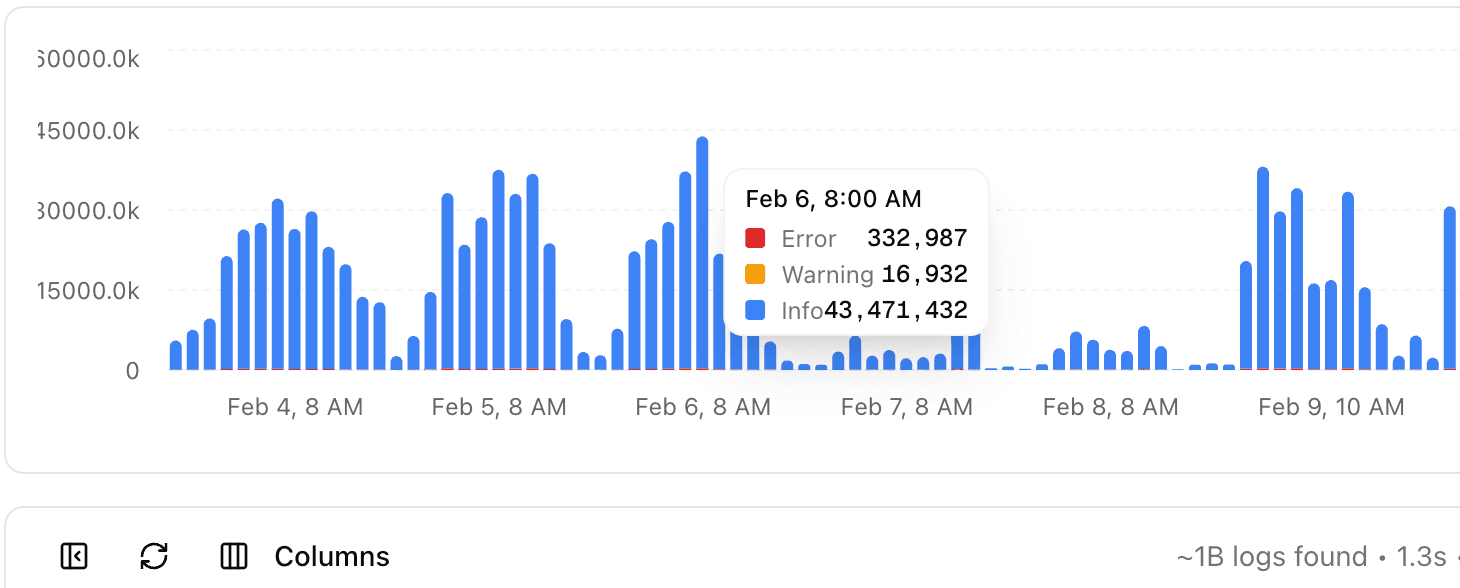

Ingesting logs at scale. We built a log ingestion system that processes PostHog's billion-plus weekly log lines so the agent can search and correlate failures quickly. Without this, you can't do meaningful diagnosis. You need to be able to look at a failure, pull the relevant logs, and cross-reference with other recent failures to determine if it's a flake or a real regression.

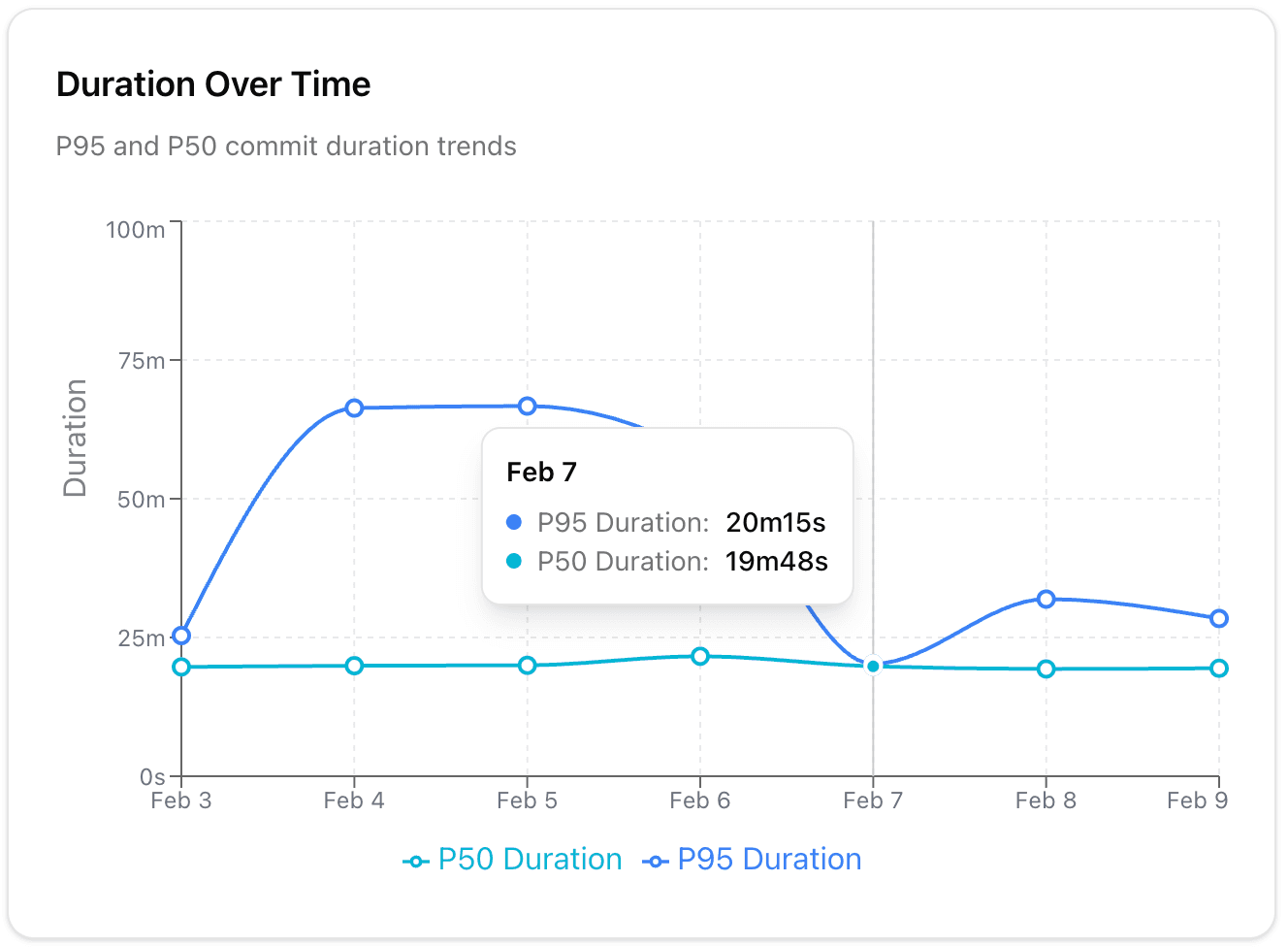
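To illustrate the cross-referencing idea (not Mendral's actual pipeline), here is a minimal sketch that reduces error lines to a "signature" by stripping details that vary between runs, then counts how many distinct runs share each signature. The normalization rules, function names, and sample log lines are all invented for the example.

```python
import re
from collections import Counter

# Hypothetical normalization rules: replace details that change from run to run.
_VOLATILE = [
    (re.compile(r"\d{4}-\d{2}-\d{2}[T ]\d{2}:\d{2}:\d{2}(\.\d+)?Z?"), "<ts>"),
    (re.compile(r"0x[0-9a-fA-F]+"), "<hex>"),
    (re.compile(r"\d+"), "<n>"),
]


def signature(line: str) -> str:
    """Reduce a log line to a run-independent failure signature."""
    for pattern, token in _VOLATILE:
        line = pattern.sub(token, line)
    return line.strip()


def recurring_signatures(
    failures_by_run: dict[str, list[str]], min_runs: int = 2
) -> dict[str, int]:
    """Map each signature to the number of distinct runs it appears in."""
    seen = Counter()
    for lines in failures_by_run.values():
        # A set, so a signature counts once per run however often it is logged.
        seen.update({signature(line) for line in lines})
    return {sig: n for sig, n in seen.items() if n >= min_runs}


# Invented sample data, for illustration only.
runs = {
    "run-1": ["2025-01-28T10:00:01Z ERROR test_insights timed out after 30s"],
    "run-2": ["2025-01-29T14:22:47Z ERROR test_insights timed out after 31s"],
    "run-3": ["2025-01-29T15:00:00Z ERROR test_billing: expected 200, got 500"],
}
print(recurring_signatures(runs))
```

A signature that recurs across unrelated runs hints at a flake or an infrastructure issue; one that appears only after a specific change looks more like a regression. At a billion lines a week, the hard part is doing this lookup fast, which a toy in-memory counter does not address.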

Detecting and tracing flakes. The agent correlates errors with code changes. When it sees a test failing intermittently, it traces the flake back to its origin: which commit introduced it, or which infrastructure condition triggers it (sometimes the cause is infra slowness or side effects, not code). This is the part that takes a human the longest, and where the agent adds the most value.

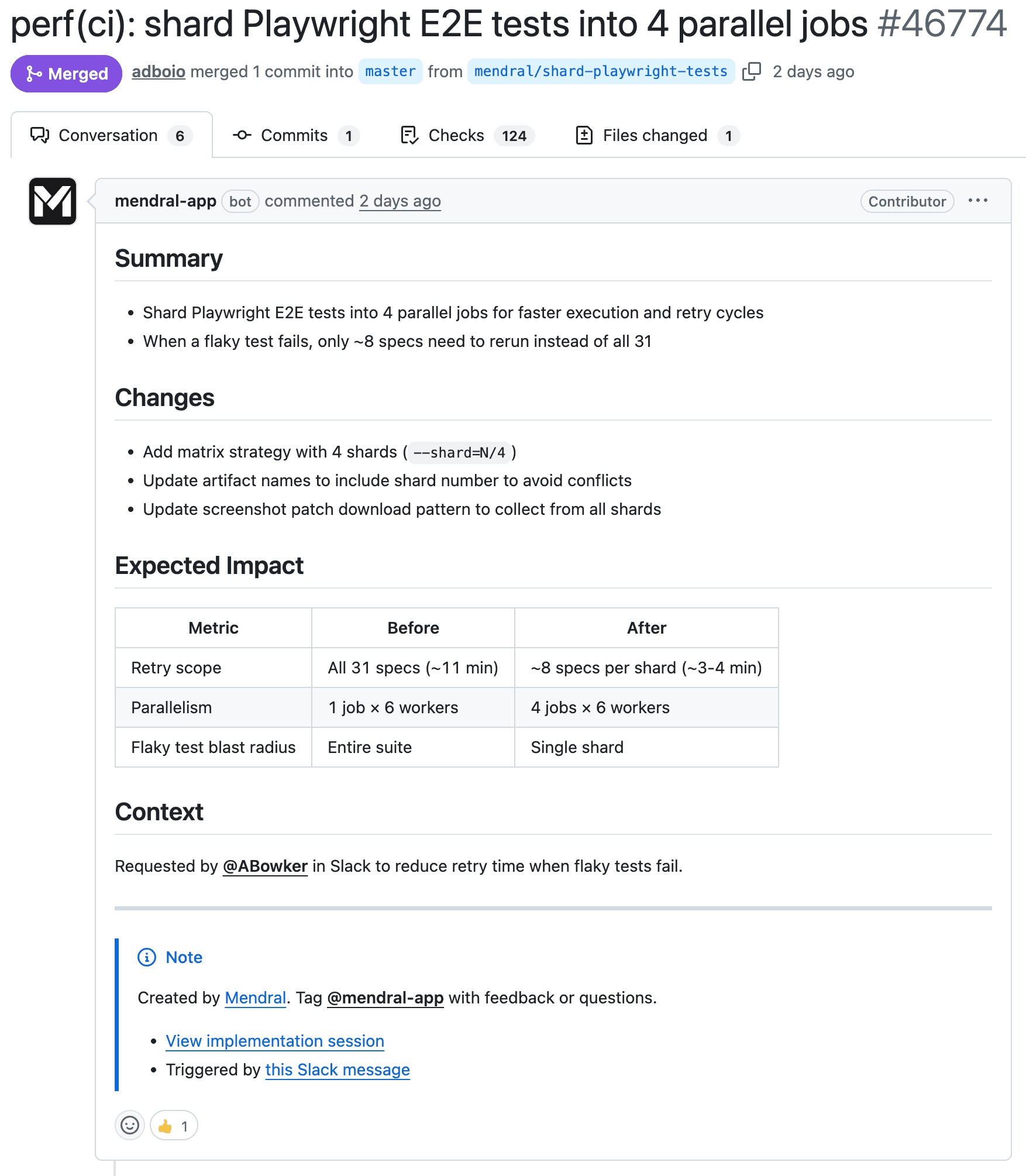
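One common heuristic for spotting a flake, sketched here under our own assumptions rather than as a description of the agent's internals: a test that both passes and fails on the same commit cannot be explained by the code alone. The types, function names, and sample history below are hypothetical.

```python
from collections import defaultdict
from typing import NamedTuple, Optional


class RunResult(NamedTuple):
    commit: str
    test: str
    passed: bool


def mixed_outcomes(results: list[RunResult]) -> set[tuple[str, str]]:
    """(test, commit) pairs where the test both passed and failed."""
    outcomes = defaultdict(set)
    for r in results:
        outcomes[(r.test, r.commit)].add(r.passed)
    return {key for key, seen in outcomes.items() if len(seen) == 2}


def flaky_tests(results: list[RunResult]) -> set[str]:
    """Tests with inconsistent results on at least one commit."""
    return {test for test, _ in mixed_outcomes(results)}


def first_flaky_commit(
    results: list[RunResult], test: str, commit_order: list[str]
) -> Optional[str]:
    """Earliest commit (oldest first) where `test` showed mixed outcomes."""
    mixed = {commit for t, commit in mixed_outcomes(results) if t == test}
    for commit in commit_order:
        if commit in mixed:
            return commit
    return None


# Invented history, oldest commit first.
history = [
    RunResult("a1", "test_export", True),
    RunResult("a1", "test_export", True),
    RunResult("b2", "test_export", True),
    RunResult("b2", "test_export", False),
    RunResult("c3", "test_export", False),
    RunResult("c3", "test_export", True),
    RunResult("c3", "test_login", False),
    RunResult("c3", "test_login", False),
]
print(flaky_tests(history))
print(first_flaky_commit(history, "test_export", ["a1", "b2", "c3"]))
```

Here `test_login` fails consistently on one commit, which looks like a regression, while `test_export` flips on the same code. The first commit with mixed outcomes is only a candidate origin: a rare flake may have been present earlier without showing up in the sampled runs.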

Opening PRs with fixes. When the agent identifies a flaky test and has enough confidence in the diagnosis, it opens a pull request to quarantine or fix it. Because PostHog's repo is public, these PRs are visible to anyone. You can go look at them right now. The agent iterates on the PR based on review comments, just like a human would.

Acting as a team member on Slack. The agent joins Slack and behaves like a team member. When there's a CI failure, it doesn't broadcast to a general channel. Every member of the PostHog team linked their account to the Mendral dashboard, so the agent knows who to involve. If your commit likely caused the failure, you get a direct message. If it's a known flake, the agent handles it without interrupting anyone. The PostHog team pushed us to improve this, and it's become one of the most impactful features.
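The routing rule described above can be sketched as a small decision function. This is a simplified illustration, not the agent's real logic: the `Failure` fields, the action names, and the fallback "triage" branch are assumptions we made for the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Failure:
    test: str
    commit_author: str          # GitHub login of the commit author
    likely_caused_by_commit: bool  # hypothetical output of the diagnosis step


def route(
    failure: Failure,
    known_flakes: set[str],
    slack_ids: dict[str, str],  # GitHub login -> linked Slack user ID
) -> tuple[str, Optional[str]]:
    """Return (action, slack_user_id) for a CI failure."""
    if failure.test in known_flakes:
        # Known flake: deal with it without interrupting anyone.
        return ("handle_silently", None)
    if failure.likely_caused_by_commit:
        slack_id = slack_ids.get(failure.commit_author)
        if slack_id is not None:
            return ("direct_message", slack_id)
    # Assumed fallback: no clear owner, so queue for further analysis.
    return ("triage", None)


flakes = {"test_export"}
accounts = {"alice": "U123"}
print(route(Failure("test_export", "alice", False), flakes, accounts))
print(route(Failure("test_login", "alice", True), flakes, accounts))
print(route(Failure("test_login", "bob", True), flakes, accounts))
```

The account mapping is what makes the direct message possible, which is why linking accounts in the dashboard matters.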

Continuous analysis. The agent doesn't just react to failures. It analyzes all commits, all CI runs, and all logs continuously. It builds a picture of the repo's health over time, identifies patterns, and surfaces insights proactively.

Four things that surprised us building a CI agent at scale

Log ingestion is the hard problem. Everyone focuses on the AI/diagnosis part, but the bottleneck is actually getting billions of log lines indexed and searchable fast enough to be useful in real time. If your agent can't search logs in seconds, it can't diagnose anything before the engineer has already context-switched to investigate manually.

Flaky tests are rarely random. Almost every "flaky" test has a deterministic root cause, just one that's hard to find. The usual culprits are timing dependencies, shared state between tests, infrastructure variance, and order-dependent execution. The agent is good at this because it can correlate across hundreds of CI runs simultaneously, something a human wouldn't have the patience to do.
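A toy example of a "flaky" test with a fully deterministic cause: two checks share module-level state, so the result depends only on execution order. The names are invented, and the tiny runner stands in for a real test framework that may shuffle or parallelize tests.

```python
# Shared module-level state that leaks between checks.
_cache: dict[str, int] = {}


def check_writes_cache() -> None:
    _cache["user_count"] = 3
    assert _cache["user_count"] == 3


def check_reads_cache() -> None:
    # Implicitly assumes nothing has written to the cache yet.
    assert "user_count" not in _cache


def run_in_order(checks) -> dict[str, str]:
    """Run checks sequentially from a clean cache and record pass/fail."""
    _cache.clear()
    results = {}
    for check in checks:
        try:
            check()
            results[check.__name__] = "pass"
        except AssertionError:
            results[check.__name__] = "fail"
    return results


print(run_in_order([check_reads_cache, check_writes_cache]))
print(run_in_order([check_writes_cache, check_reads_cache]))
```

Both checks pass in the first order, and `check_reads_cache` fails in the second. From a single red build this looks random; across many runs with different orderings, the pattern is visible.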

The routing problem is underappreciated. Knowing who to notify about a failure is almost as valuable as knowing what failed. At PostHog's scale, a failure notification going to a general channel means 98 people glance at it and 97 ignore it. Having the agent figure out who actually needs to look at it, based on the code change and the failure signature, removes a lot of noise.

Working on a public repo keeps you honest. Every PR our agent opens on PostHog's repo is visible to anyone. This has been great for us because it forces total transparency. You can see exactly what the agent is doing, how it reasons about failures, and what fixes it proposes.

The bigger picture

We think the CI challenge is going to grow for most teams, not shrink. AI coding tools (Cursor, Copilot, Claude Code) are increasing the volume of code changes. More changes mean more CI runs, more potential failures, and more flaky tests surfacing. The delivery pipeline becomes the bottleneck.

PostHog is a good example of what a well-run, fast-moving team looks like at scale. They have 22,477 tests with a 99.98% pass rate, ship 65 commits to main daily, and keep 98 engineers productive on a single monorepo. That's impressive engineering. Our job is to help teams like theirs stay fast as the volume keeps growing.

We got lucky onboarding PostHog early in our YC batch. Their scale pushed the limits of our agent fast, in ways we couldn't have simulated ourselves. It forced us to solve real problems at real volume from day one. A big thank you to Tim for his trust and support, and to the whole PostHog team for working with us in the open on a public repo and giving us the feedback that's shaped a lot of what Mendral does today. We've spent a decade building and scaling CI systems ourselves, starting at Docker in 2013 when the problem was already hard, so it's been exciting to finally build the agent that automates the work we used to do manually.

We're Sam and Andrea. I was the first hire at Docker and later VP of Engineering; Andrea wrote Docker's first commit. If your CI looks anything like this, we'd love to look at your numbers.